- It’s amazing how quickly the fastest processors execute instructions, so why don’t we use the fastest chip everywhere?

- We list the reasons why using the best and most advanced technology rarely makes sense.

- Some reasons you already know (financials), but others you may have overlooked.

Picture this: your fridge, your watch, even your toaster – all running on the absolute fastest processor ever made.

Sounds amazing, right? Like something straight out of a sci-fi movie. But as I started digging into why we don’t actually do this, I realized it’s way more complicated (and interesting!) than just “faster is better.”

CONTENTS

- Understanding processor (CPU) performance

- The tyranny of physics: heat and power

- Battery life matters most when it comes to portable devices

- Diminishing returns: the cost of every added megahertz

- Form factor constraints: size, weight, and materials

- Specialized vs. general-purpose processors

- Software and workload realities

- Reliability and longevity increase if you’re not pushing chips too far

- Regulatory and environmental considerations

- The user experience imperative

- Fast enough CPUs are often preferred to the “fastest” processors

- Summing it all up

Understanding processor (CPU) performance

At its core, a processor’s job is to execute instructions as efficiently as possible. In theory, a faster processor with a higher clock speed and more cores sounds like the ideal component to power all our devices. However, raw speed is only one piece of the puzzle. Performance is measured not only by how many operations per second can be carried out but also by how efficiently those operations are performed under real-world conditions.

For instance, a processor designed for high-end desktop computers might excel in heavy multitasking and intensive applications. But if you swapped that processor into a mobile device, you might encounter issues that outweigh its performance benefits.

The gap between theoretical maximum speeds and pragmatic, sustainable performance is bridged by factors like energy efficiency, heat generation, and workload requirements. This nuanced approach to design helps explain why the fastest processors aren’t a one-size-fits-all solution. And that’s OK.

The tyranny of physics: heat and power

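At the heart of semiconductor physics lies a fundamental trade-off: clock speed vs. heat dissipation. Every time a transistor switches, it consumes energy and generates heat. Switching power rises in direct proportion to clock frequency and with the square of the supply voltage, and because higher clocks usually demand higher voltage, ramping up the frequency makes power grow much faster than the clock itself, closer to the cube of the frequency in the worst case.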
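To put rough numbers on that, here is a minimal sketch of the textbook first-order model for switching power, P ≈ a · C · V² · f. The capacitance, voltages, and frequencies below are made-up illustrative values, not measurements of any real chip, and the model ignores leakage power entirely.

```python
def dynamic_power(capacitance_f, voltage_v, frequency_hz, activity=1.0):
    """First-order CMOS switching power: P = activity * C * V^2 * f."""
    return activity * capacitance_f * voltage_v ** 2 * frequency_hz


# Hypothetical operating points, chosen only for illustration.
base = dynamic_power(1e-9, 1.0, 3.0e9)  # 3.0 GHz at 1.0 V
fast = dynamic_power(1e-9, 1.2, 4.5e9)  # 4.5 GHz at 1.2 V

clock_ratio = 4.5e9 / 3.0e9
power_ratio = fast / base

print(f"clock: {clock_ratio:.2f}x, power: {power_ratio:.2f}x")
```

In this toy example, a 50% higher clock costs a bit more than twice the power, because the voltage had to rise along with the frequency.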

In a desktop tower with room for giant heat sinks and multiple fans, you can handle dozens, even hundreds of watts of thermal output.

But try stuffing that kind of thermal budget into a smartphone shell just a few millimeters thick, and you’ll end up holding a literal hot brick. Worse yet, excessive heat not only makes devices uncomfortable to touch but also accelerates wear on the silicon itself, shortening its operational lifespan.

Most of the time, product and chip designers must choose processors whose thermal design power (TDP) fits within tight thermal envelopes, balancing performance with reliable heat dissipation.
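A thermal envelope can be sketched with the common steady-state approximation: chip temperature equals ambient temperature plus power multiplied by the thermal resistance of the cooling path. The resistance figures below are assumptions picked to contrast a fan-cooled tower with a passively cooled handheld. They are not datasheet values.

```python
def junction_temp_c(ambient_c, power_w, theta_c_per_w):
    """Steady-state chip temperature: T = T_ambient + P * thermal resistance."""
    return ambient_c + power_w * theta_c_per_w


def max_sustained_power_w(ambient_c, limit_c, theta_c_per_w):
    """Largest power the cooling path can shed without exceeding limit_c."""
    return (limit_c - ambient_c) / theta_c_per_w


# Hypothetical thermal resistances in degrees C per watt (lower is better).
DESKTOP_COOLER = 0.3
PASSIVE_HANDHELD = 10.0

desktop_budget = max_sustained_power_w(25.0, 95.0, DESKTOP_COOLER)
handheld_budget = max_sustained_power_w(25.0, 95.0, PASSIVE_HANDHELD)

print(f"desktop: {desktop_budget:.0f} W, handheld: {handheld_budget:.0f} W")
```

With the same silicon temperature limit, the cooling path alone separates a budget of a couple of hundred watts from one of a few watts. A real phone's budget is tighter still, because the case has to stay comfortable to hold.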

Too much heat not only impairs performance but can also reduce a component’s lifespan and compromise user safety. Consequently, designers often opt for processors that provide “good enough” performance without generating excessive heat.

This means that, while the fastest processors might exist in laboratory settings (think overclocking contests), they aren’t always the best fit for devices where space is at a premium and thermal management is a critical challenge. The trade-off here is a balancing act: optimizing performance while ensuring the device remains cool, safe, and comfortable to use.

Manufacturers have developed special architectures, like ARM’s big.LITTLE configuration, where high-power cores are paired with energy-efficient cores. The system intelligently switches between these cores based on the computing demands, delivering high performance when needed and conserving battery life when tasks are less intensive. This kind of dynamic power management is key to modern mobile computing.
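The core-switching idea can be sketched as a toy policy: run each task on the lowest-power cluster that can keep up with it. Everything here (the `Cluster` type, the capacity and power figures, the `pick_cluster` helper) is hypothetical and only illustrates the principle. Real schedulers also weigh migration cost, thermal state, and much more.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cluster:
    name: str
    capacity: float  # relative throughput (hypothetical units)
    power_w: float   # assumed active power draw


LITTLE = Cluster("little", capacity=1.0, power_w=0.3)
BIG = Cluster("big", capacity=3.0, power_w=2.0)


def pick_cluster(demand: float) -> Cluster:
    """Return the lowest-power cluster whose capacity covers the demand."""
    for cluster in sorted((LITTLE, BIG), key=lambda c: c.power_w):
        if demand <= cluster.capacity:
            return cluster
    return BIG  # demand exceeds everything: fall back to the fastest cluster


for demand in (0.2, 0.9, 2.5):
    print(demand, "->", pick_cluster(demand).name)
```

Light work such as background sync stays on the small cores, and only demanding bursts wake the big ones.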

Battery life matters most when it comes to portable devices

Directly linked with thermal constraints is battery life. Modern users expect smartphones to last all day on a single charge, laptops to go eight hours or more, and wearables to run for days or weeks.

High-speed processors gulp power: flagship desktop chips can draw well over 100 W, and sometimes more than 200 W, when running near their maximum clock. In contrast, a well-engineered mobile SoC typically budgets less than 5 W in heavy use to reach that “all-day” promise, often sipping well under a watt in typical, less strenuous use.

Without those compromises, your phone would either die in an hour or need a battery several times as thick and heavy as the current one. That’s hardly a winning trade-off. Engineering powerful yet efficient processors is no small feat.
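The arithmetic behind that claim is simple: runtime is battery energy divided by average power draw. The sketch below assumes a 4000 mAh pack at a nominal 3.85 V and two made-up average power levels. It ignores conversion losses and everything else in the phone that draws power.

```python
def battery_wh(capacity_mah, nominal_v):
    """Convert a battery rating in mAh to watt-hours."""
    return capacity_mah / 1000 * nominal_v


def runtime_hours(capacity_wh, avg_power_w):
    """Idealized runtime: stored energy divided by average draw."""
    return capacity_wh / avg_power_w


pack = battery_wh(4000, 3.85)  # assumed phone-sized battery

efficient = runtime_hours(pack, 1.0)   # assumed 1 W average draw
hungry = runtime_hours(pack, 15.0)     # assumed 15 W average draw

print(f"{pack:.1f} Wh: {efficient:.1f} h at 1 W, {hungry:.1f} h at 15 W")
```

Under these assumptions, the same battery that lasts most of a day at 1 W is empty in about an hour at 15 W.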

Diminishing returns: the cost of every added megahertz

Imagine you’re at a car dealership where the price escalates steeply with every extra mph of top speed. The analogy holds true for silicon: pushing clock speeds from 4.0 GHz to 4.5 GHz might require only a small change in manufacturing, but moving from 5.0 GHz to 5.5 GHz often requires significant design changes, higher-grade silicon (binning for chips that can handle more voltage and heat), and more rigorous testing.

Each additional megahertz beyond a certain point yields smaller real-world speedups, especially in applications that can’t fully utilize raw single-thread performance. Meanwhile, the additional engineering risk, higher defect rates, and increased fabrication costs eat into both manufacturer margins and end-user price points.

We all want the fastest CPU *that we can afford. And that asterisk makes a big difference.

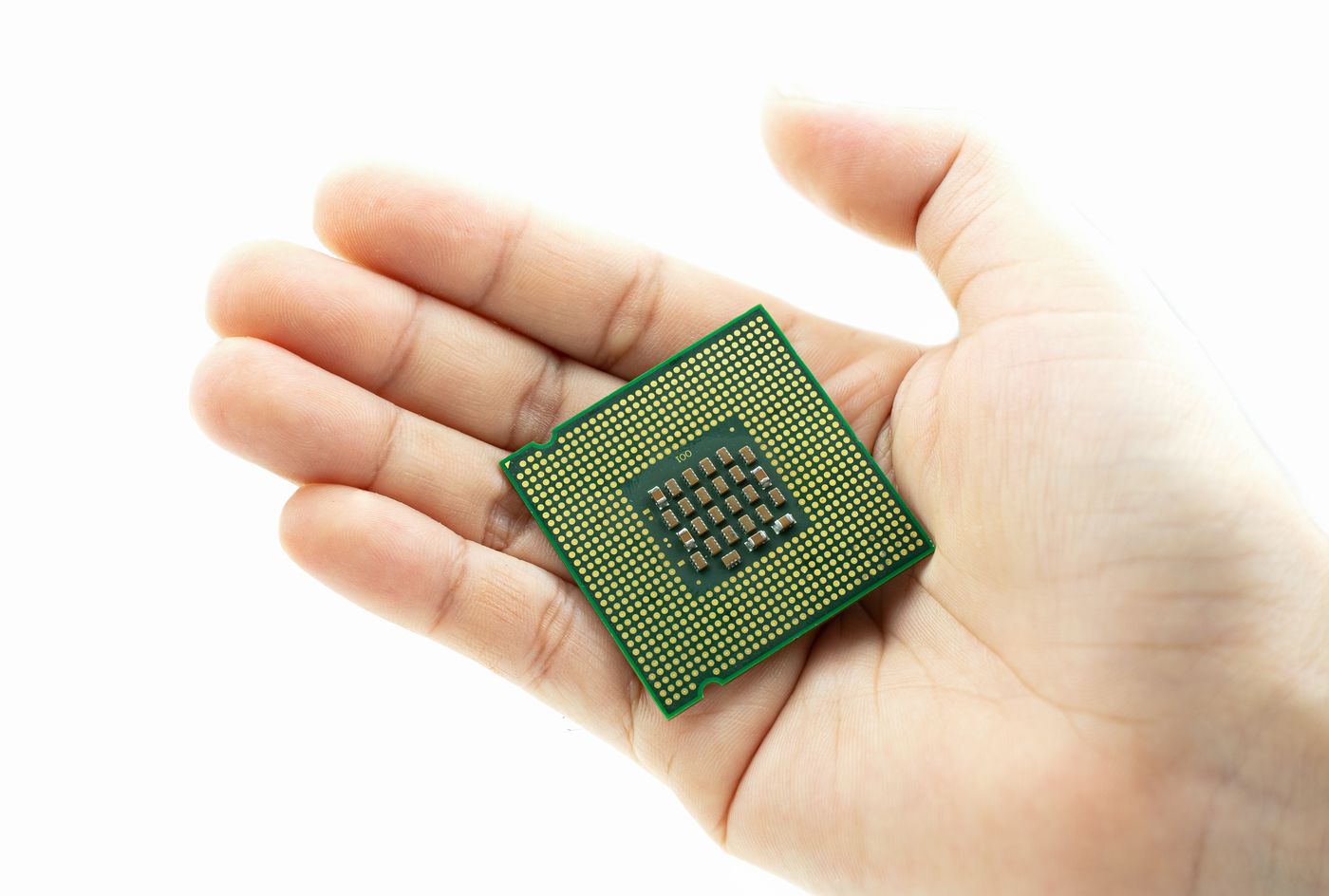

Form factor constraints: size, weight, and materials

Beyond the silicon itself, your device’s chassis, heat sink materials, and cooling solutions impose limits. A smartphone uses thin copper heat spreaders and relies partly on the metal frame and glass back to radiate heat. A gaming laptop might include vapor chambers and fans but still can’t match the cooling capacity of a desktop’s airflow-optimized case.

Wearables and IoT gadgets further shrink the envelope: there’s often no room for active cooling at all. Designers must make trade-offs between raw compute muscle and the physical realities of the device’s shape, weight, and build materials.

More often than not, the size and weight of a device determine which CPUs you can use. Choosing something beefier isn’t bold; more often, it’s simply a bad design decision.

Specialized vs. general-purpose processors

In many domains, the bleeding edge in “speed” comes from specialization. GPUs, for example, dedicate hundreds or thousands of smaller cores, plus wide SIMD units and high-bandwidth memory, to tasks like graphics rendering or scientific computing. Google’s TPUs and other AI accelerators add matrix multiplication engines to speed up deep learning workloads.

But those architectures are optimized for narrow, very specific tasks: they excel at parallel floating-point math but aren’t as flexible for general computing.

Embedding such specialized silicon in every device would be overkill (and wasteful) for everyday tasks like web browsing or email. Instead, devices use “just right” processors that balance general-purpose capability with task-specific accelerators (e.g., video decoding, AI inference) to boost efficiency where it matters.

Software and workload realities

Raw clock speed is only part of the performance story. Modern processors rely heavily on cache hierarchies, branch prediction, out-of-order execution, and multi-threading to keep pipelines fed with data.

In many real-world applications, particularly those with lots of pointer chasing, irregular memory access, or heavy I/O, higher clock rates deliver only modest gains.
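A simple model shows why. Assume a task's runtime splits into compute time, which shrinks as the clock rises, and time spent waiting on memory or I/O, which does not. The cycle counts and stall times below are invented for illustration, and the fixed-stall assumption is a simplification.

```python
def run_time_s(compute_cycles, frequency_hz, stall_s):
    """Runtime = clock-dependent compute time + clock-independent stall time."""
    return compute_cycles / frequency_hz + stall_s


def speedup(compute_cycles, stall_s, f_old_hz, f_new_hz):
    """How much faster the task finishes after raising the clock."""
    old = run_time_s(compute_cycles, f_old_hz, stall_s)
    new = run_time_s(compute_cycles, f_new_hz, stall_s)
    return old / new


# Same 25% clock bump (4 GHz to 5 GHz) applied to two hypothetical workloads.
compute_bound = speedup(4e9, 0.0, 4e9, 5e9)  # never waits on memory
memory_bound = speedup(4e9, 1.0, 4e9, 5e9)   # waits 1 s on memory regardless

print(f"compute-bound: {compute_bound:.2f}x, memory-bound: {memory_bound:.2f}x")
```

The compute-bound task gets the full 25% speedup, while the task that spends half its time stalled gets only about 11%.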

Developers and system architects often find that optimizing software for data locality, parallelism, and algorithmic efficiency yields far better returns than chasing every extra few hundred megahertz of hardware speed. In effect, many devices cap CPU performance where the “software curve” starts flattening, choosing instead to fine-tune compilers, libraries, and runtime systems.

So yes, it also matters how you define “fastest”, because depending on the workload you may be looking at completely different things.

Reliability and longevity increase if you’re not pushing chips too far

Servers and mission-critical systems sometimes use processors that run at slightly lower clocks than their maximum bin, precisely to improve reliability. Running chips at reduced voltage/frequency points (a technique called undervolting/underclocking) can significantly cut leakage currents and thermal stress, extending component lifespan and reducing the probability of silent data corruption or sudden failures.

Consumer electronics companies care deeply about return rates, warranty costs, and brand reputation. It’s often preferable to guarantee that every unit will survive typical user abuse (extended gaming sessions, warm ambient temperatures, dust exposure) than to push every chip to its absolute limit.

Regulatory and environmental considerations

As public concern over energy consumption and e-waste grows, regulators and industry consortia increasingly push for greener electronics. Programs like Energy Star set power-efficiency benchmarks for devices, incentivizing manufacturers to hit lower idle and load-power numbers. Corporate sustainability goals further encourage designs that minimize carbon footprint over the device’s life cycle.

In this context, using the fastest possible processor in every device, regardless of whether that performance is actually needed, runs counter to broader environmental objectives. Better, more efficient silicon, adaptive power management, and longer device lifespans all take priority over peak clock speed.

The user experience imperative

Ultimately, most consumers care less about raw CPU clock speed and more about responsiveness, battery life, heat, noise, and overall feel. A phone that’s slightly slower in benchmark scores but stays cool and lasts 16 hours of heavy use will feel far more premium than one that races through tests but shuts down after two hours or becomes an uncomfortably hot pocket heater.

Manufacturers spend countless hours on UX testing, balancing CPU speed, GPU performance, and system-level optimizations to deliver a consistently smooth experience under real-world conditions.

That often means dialing back the processor’s top clock rate to hit a sweet spot where performance feels snappy, thermals stay in check, and battery life exceeds expectations.

Fast enough CPUs are often preferred to the “fastest” processors

The good news is that “fast enough” today often feels “plenty fast” tomorrow. Chip designers are increasingly turning to heterogeneous architectures, combining big, power-hungry cores with smaller efficiency cores, plus dedicated AI, graphics, and video engines, to match the right hardware to the right task.

This lets devices scale up performance when needed and coast at ultra-low power when idle. Adaptive hardware that dynamically reconfigures on the fly, silicon photonics for interconnects, and 3D-stacked chiplets promise even greater flexibility.

Rather than one monolithic “fastest” processor, tomorrow’s devices may weave together a plethora of specialized engines, each excelling at its niche, delivering overall performance that outstrips any single “big core.”

Want to find out what “fastest” means for you? Read our articles on how many CPU cores you need for gaming today and for running power-hungry apps.

Summing it all up

So, why don’t we plaster every device with the fastest possible processors? Because “fastest” often means hotter, more power-hungry, costlier, shorter-lived, and less environmentally friendly: traits that clash with the real-world needs of mobile, embedded, and consumer electronics.

Instead, the industry strives for balance: matching silicon capabilities to use cases, optimizing software, and innovating in packaging and power management to deliver the best overall experience.

In the end, the goal isn’t a pointless race for megahertz; it’s crafting devices that delight users with smooth performance, long battery life, comfortable thermals, and affordable prices. And that is why the “biggest” and “fastest” CPUs are glorious in supercomputers and flagship desktops, but unnecessary (and undesirable) in the devices you use every day.